Overview

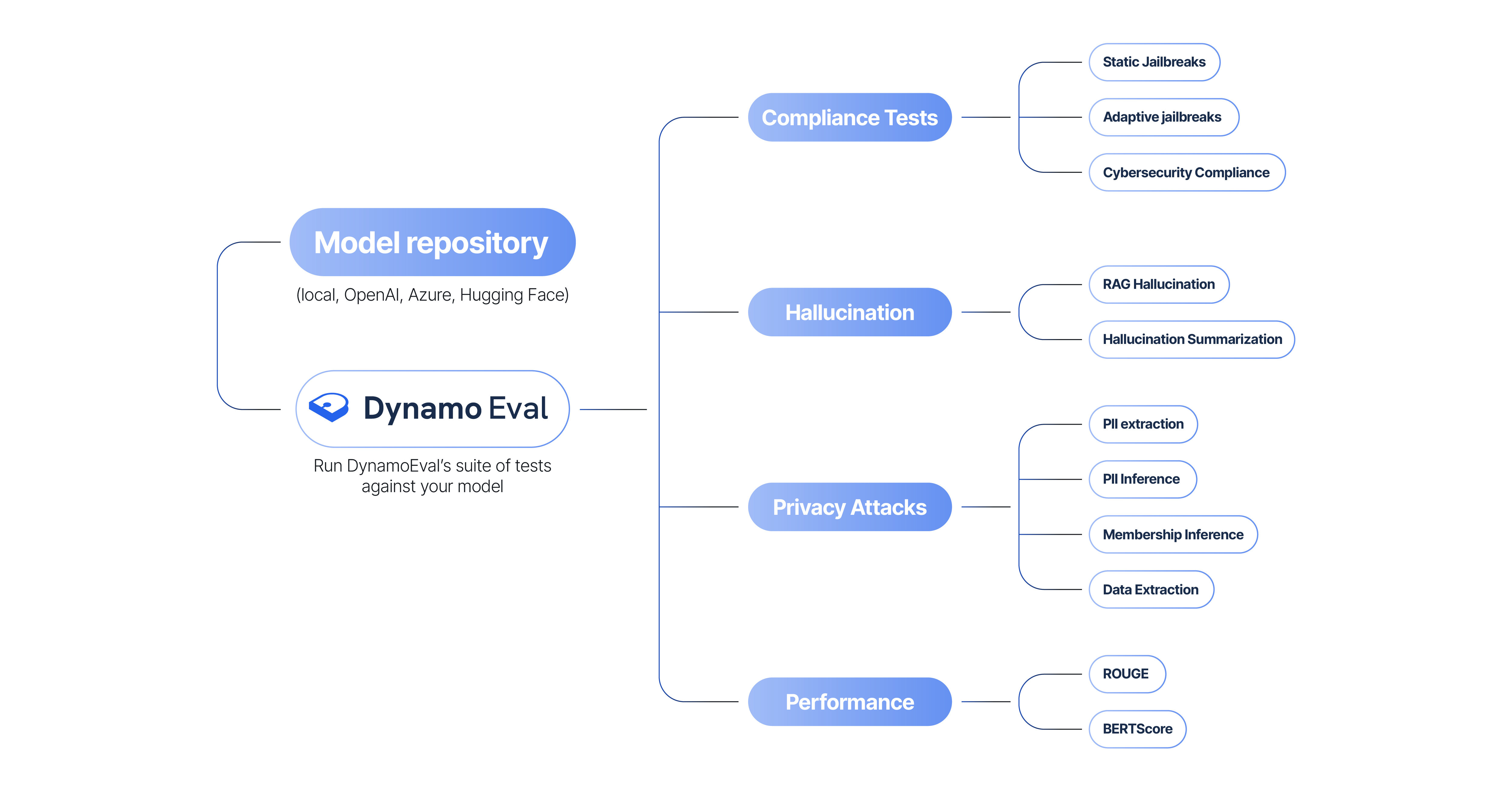

Dynamo AI’s penetration testing module is an automated model vulnerability assessment tool. It currently offers four types of assessments (privacy, hallucination, compliance and performance), and each type includes a variety of test types that target specific vulnerabilities. The module can be used either as a no-code tool directly in the Dynamo AI platform or through the Dynamo AI Python SDK.

Attacks and Tests

Test Inventory

| Test Category | Test Name | Test Detail | Sample Test Report |
|---|---|---|---|
| Privacy | PII Extraction | Tests whether PII from the training dataset can be emitted by naively prompting the model, simulating an attacker with no knowledge of the training dataset | View Report |
| | PII Inference | Tests whether the model can fill PII back into sentences from the fine-tuning dataset from which PII was redacted, assuming an attacker with knowledge of the concepts and potential PII in the dataset | View Report |
| | PII Reconstruction | Tests whether the model can fill PII back into sentences from the fine-tuning dataset from which PII was redacted, assuming a partially informed attacker | View Report |
| | Sequence Extraction | Tests whether the model can be prompted to reveal training data verbatim in its responses | View Report |
| | Membership Inference | Tests whether specific data records can be inferred to be part of the model's training dataset | View Report |
| Hallucination | NLI Consistency | Measures the logical consistency between an input text (or document) and a model-generated summary using an NLI (natural language inference) model | View Report |
| | UniEval Factuality | Measures the factual support between an input text (or document) and a model-generated summary using the UniEval model | View Report |
| RAG Hallucination | Faithfulness | Measures how faithful model-generated responses are to the set of retrieved documents | View Report |
| | Retrieval Relevance | Measures the relevance of the documents retrieved from the vector database by the embedding model for each query | View Report |
| | Response Relevance | Measures how relevant the generated response is to the input query | View Report |
| Compliance | Cybersecurity Compliance | Checks whether model responses contain malicious code, based on the MITRE framework | View Report |
| | Static Jailbreak Tests Framework | Tests whether the model is prone to jailbreaking attacks and whether its responses remain compliant | View Report |
| | Bias/Toxicity Tests Framework | Tests whether the model can be forced to emit toxic or biased content | View Report |
| | Adaptive Jailbreak Attacks | Tests whether the model is prone to jailbreaks using algorithmically generated prompts | View Report |
| Performance | ROUGE | Evaluates summary quality against a reference text by measuring the token-level overlap between the model-generated text and the reference | View Report |
| | BERTScore | Computes the semantic similarity between a reference text and a model response using pre-trained BERT embeddings, reported as precision, recall and F1 scores | View Report |
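The PII Extraction test checks whether PII from the training data surfaces when the model is prompted naively. As a rough illustration of how such a check could be scored (this is not Dynamo AI's implementation), the hypothetical helper `pii_extraction_rate` below counts which known PII strings appear verbatim, case-insensitively, in a batch of model responses. The PII list and responses are made-up toy data.

```python
from collections.abc import Iterable


def pii_extraction_rate(known_pii: Iterable[str],
                        responses: Iterable[str]) -> tuple[float, set[str]]:
    """Return the fraction of known PII strings emitted verbatim, plus the leaked set.

    Hypothetical helper: `known_pii` stands in for PII found in the training
    data and `responses` for outputs collected from naive prompts.
    Matching is a case-insensitive exact substring search.
    """
    pii = {p.strip() for p in known_pii if p.strip()}
    lowered = [r.lower() for r in responses]
    leaked = {p for p in pii if any(p.lower() in r for r in lowered)}
    rate = len(leaked) / len(pii) if pii else 0.0
    return rate, leaked


if __name__ == "__main__":
    training_pii = ["jane.doe@example.com", "555-0142", "John Smith"]
    outputs = [
        "You can reach her at JANE.DOE@example.com for details.",
        "I'm sorry, I can't share personal information.",
        "The account holder is John Smith.",
    ]
    rate, leaked = pii_extraction_rate(training_pii, outputs)
    print(f"{rate:.2f}", sorted(leaked))
```

Exact matching misses paraphrased or reformatted PII (for example, a phone number with different separators), so a production check would likely combine it with a PII detector.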

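Membership inference is often illustrated with a simple loss-threshold attack: records on which the model has unusually low loss are guessed to be training members. The sketch below assumes per-record losses have already been computed for known members and non-members. The threshold and the toy losses are illustrative, and this is not necessarily the attack Dynamo AI runs.

```python
from dataclasses import dataclass


@dataclass
class AttackResult:
    true_positive_rate: float   # members correctly flagged as members
    false_positive_rate: float  # non-members wrongly flagged as members
    accuracy: float


def loss_threshold_attack(member_losses: list[float],
                          nonmember_losses: list[float],
                          threshold: float) -> AttackResult:
    """Predict 'member' whenever a record's loss is below `threshold`."""
    if not member_losses or not nonmember_losses:
        raise ValueError("need at least one member and one non-member loss")
    tp = sum(loss < threshold for loss in member_losses)
    fp = sum(loss < threshold for loss in nonmember_losses)
    tn = len(nonmember_losses) - fp
    total = len(member_losses) + len(nonmember_losses)
    return AttackResult(
        true_positive_rate=tp / len(member_losses),
        false_positive_rate=fp / len(nonmember_losses),
        accuracy=(tp + tn) / total,
    )


if __name__ == "__main__":
    members = [0.12, 0.30, 0.25, 0.90]     # toy losses on fine-tuning records
    nonmembers = [1.10, 0.85, 1.40, 0.20]  # toy losses on held-out records
    print(loss_threshold_attack(members, nonmembers, threshold=0.5))
```

A true-positive rate that stays well above the false-positive rate across thresholds suggests the model leaks information about which records it was trained on.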
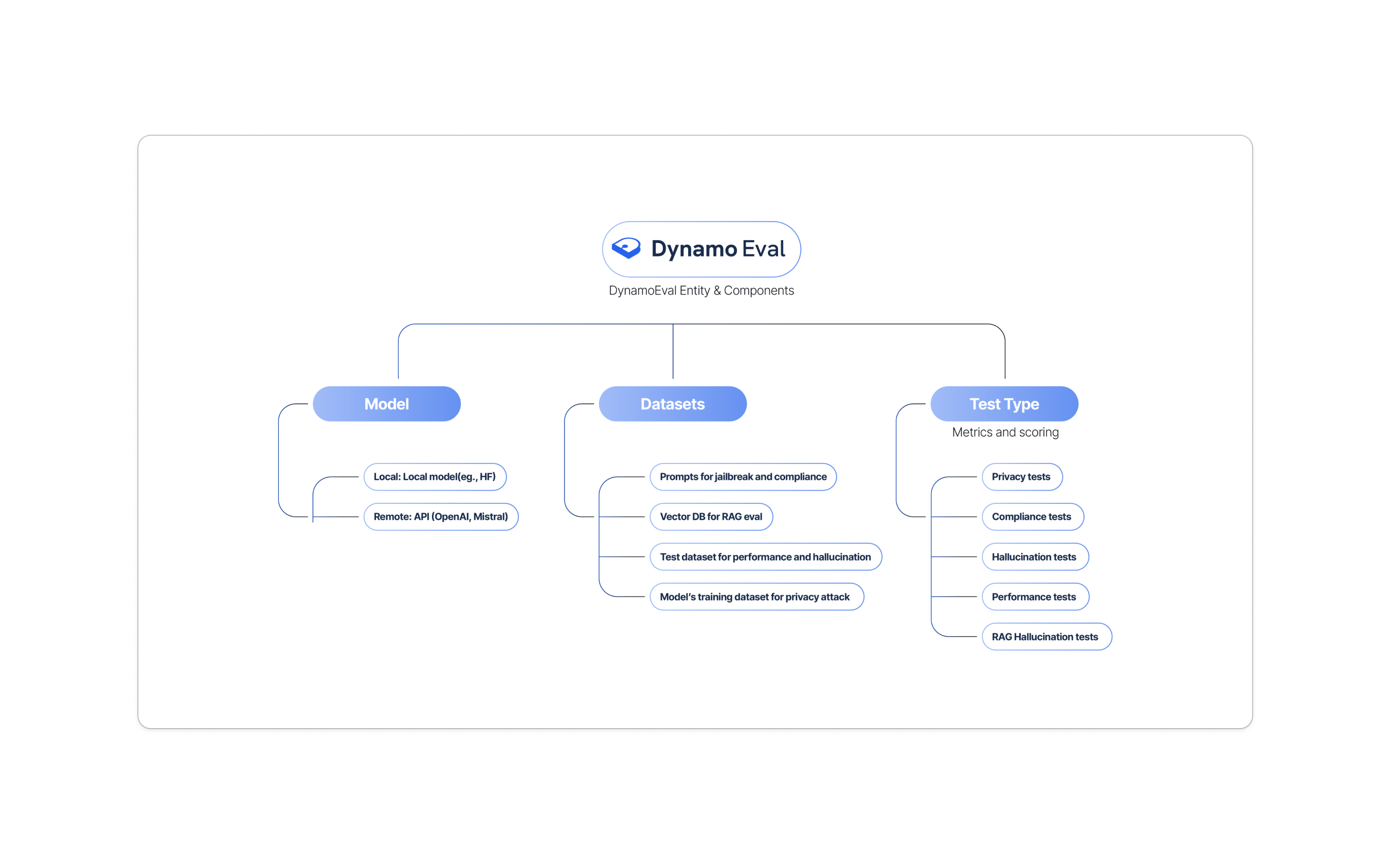
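ROUGE, listed under the Performance tests in the inventory, measures token-level overlap between a model-generated text and a reference. A minimal ROUGE-N sketch with clipped n-gram counts is shown below. It assumes lowercase whitespace tokenization; standard implementations add their own tokenization rules, optional stemming and variants such as ROUGE-L, so scores will not match them exactly.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count the n-grams in a token list (empty if there are fewer than n tokens)."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: str, reference: str, n: int = 1) -> dict[str, float]:
    """ROUGE-N precision, recall and F1 over lowercase whitespace tokens."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # matches clipped to the smaller count
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    candidate = "the cat lay on the mat"
    print(rouge_n(candidate, reference, n=1))
    print(rouge_n(candidate, reference, n=2))
```

Clipping via `Counter` intersection prevents a candidate from earning credit for repeating a reference word more often than it occurs in the reference.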

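BERTScore, the other Performance metric, greedily matches each token of one text to its most similar token in the other text by cosine similarity of contextual embeddings. Precision averages the best match for each candidate token, recall averages the best match for each reference token, and F1 combines the two. The sketch below assumes the token embeddings have already been produced by a BERT-family model; the tiny 2-D vectors are toy stand-ins, and the optional IDF weighting and baseline rescaling of the published metric are omitted.

```python
import math

Vector = list[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def bertscore_from_embeddings(candidate: list[Vector],
                              reference: list[Vector]) -> dict[str, float]:
    """Greedy-matching BERTScore over precomputed token embeddings."""
    if not candidate or not reference:
        raise ValueError("both texts need at least one token embedding")
    # sim[i][j] = similarity of candidate token i to reference token j
    sim = [[cosine(c, r) for r in reference] for c in candidate]
    # Precision: each candidate token matched to its closest reference token.
    precision = sum(max(row) for row in sim) / len(candidate)
    # Recall: each reference token matched to its closest candidate token.
    recall = sum(
        max(sim[i][j] for i in range(len(candidate)))
        for j in range(len(reference))
    ) / len(reference)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    cand_emb = [[1.0, 0.0], [0.0, 1.0]]  # toy embeddings, one per token
    ref_emb = [[1.0, 0.0], [1.0, 1.0]]
    print(bertscore_from_embeddings(cand_emb, ref_emb))
```

Because matching uses embeddings rather than exact tokens, paraphrases can score highly under BERTScore even when their ROUGE overlap is low.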
Components

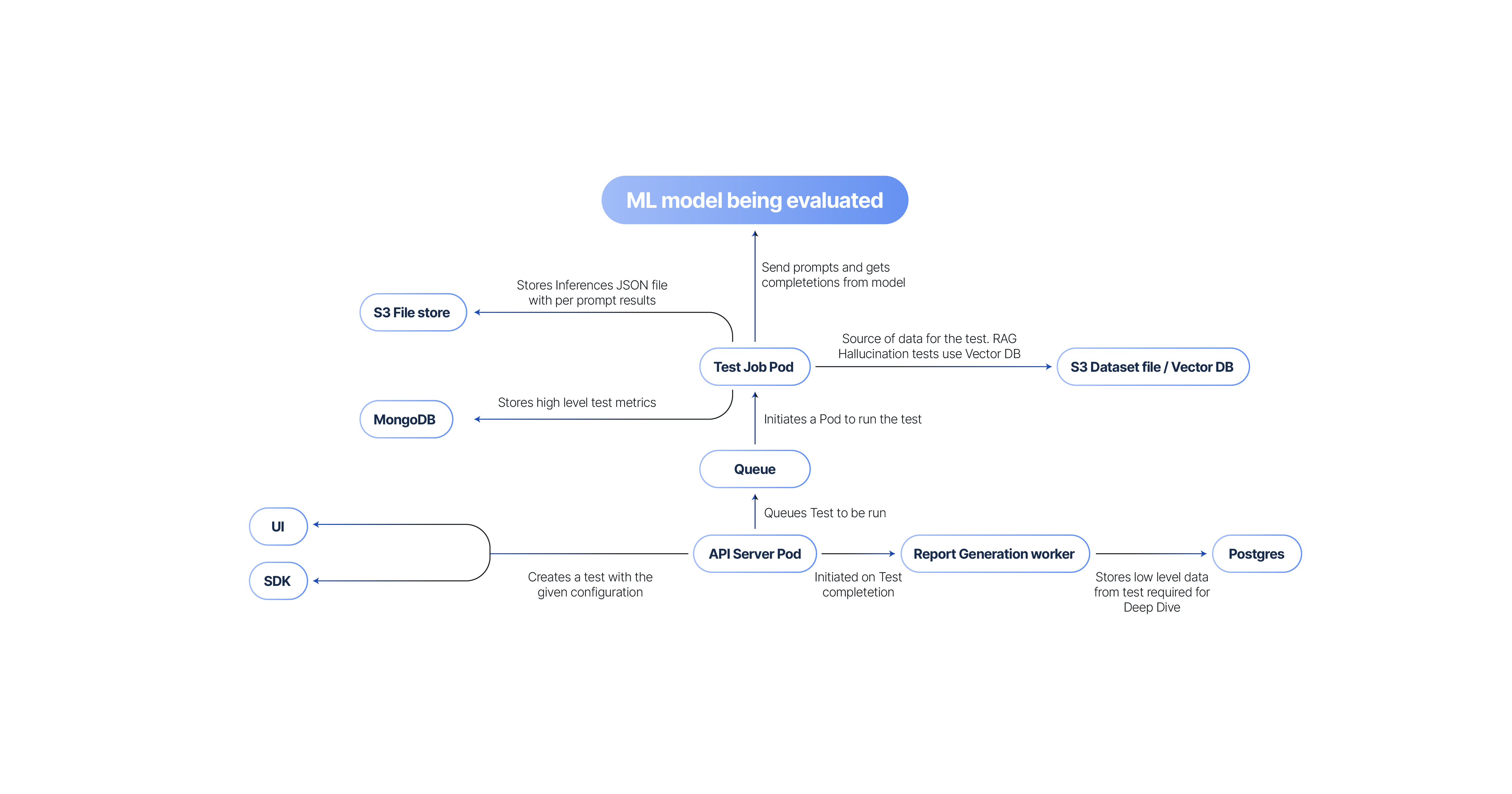

Architecture